Text2BIM: An LLM-Based Multi-Agent Framework Facilitating the Expression of Design Intentions More Intuitively

Building Information Modeling (BIM) is a comprehensive method of representing built assets with geometric and semantic data. This data can be used throughout a building's lifecycle and shared in dedicated formats among project stakeholders. Current BIM authoring software tries to accommodate a wide range of design needs. Because of this all-in-one approach, the software has accumulated many features and tools, which has made its user interface increasingly complex. Translating design intent into complicated command sequences to build models in such software can be challenging for designers, who often need substantial training to overcome the steep learning curve.

Recent research suggests that large language models (LLMs) can be used to produce wall features automatically. Advanced 3D generative models such as Magic3D and DreamFusion let designers convey their design intent in natural language rather than through laborious modeling commands, which is particularly useful in fields like virtual reality and game development. However, these text-to-3D methods usually represent shapes with Neural Radiance Fields (NeRFs) or voxels, which capture only surface-level geometry, carry no semantic information, and do not model what lies inside the objects. Because these purely geometric shapes differ fundamentally from native BIM models, they are hard to incorporate into BIM-based architectural design processes. The missing semantic information also makes them difficult to use in downstream building simulation, analysis, and maintenance tasks, and designers cannot directly edit or refine the generated content in BIM authoring tools.

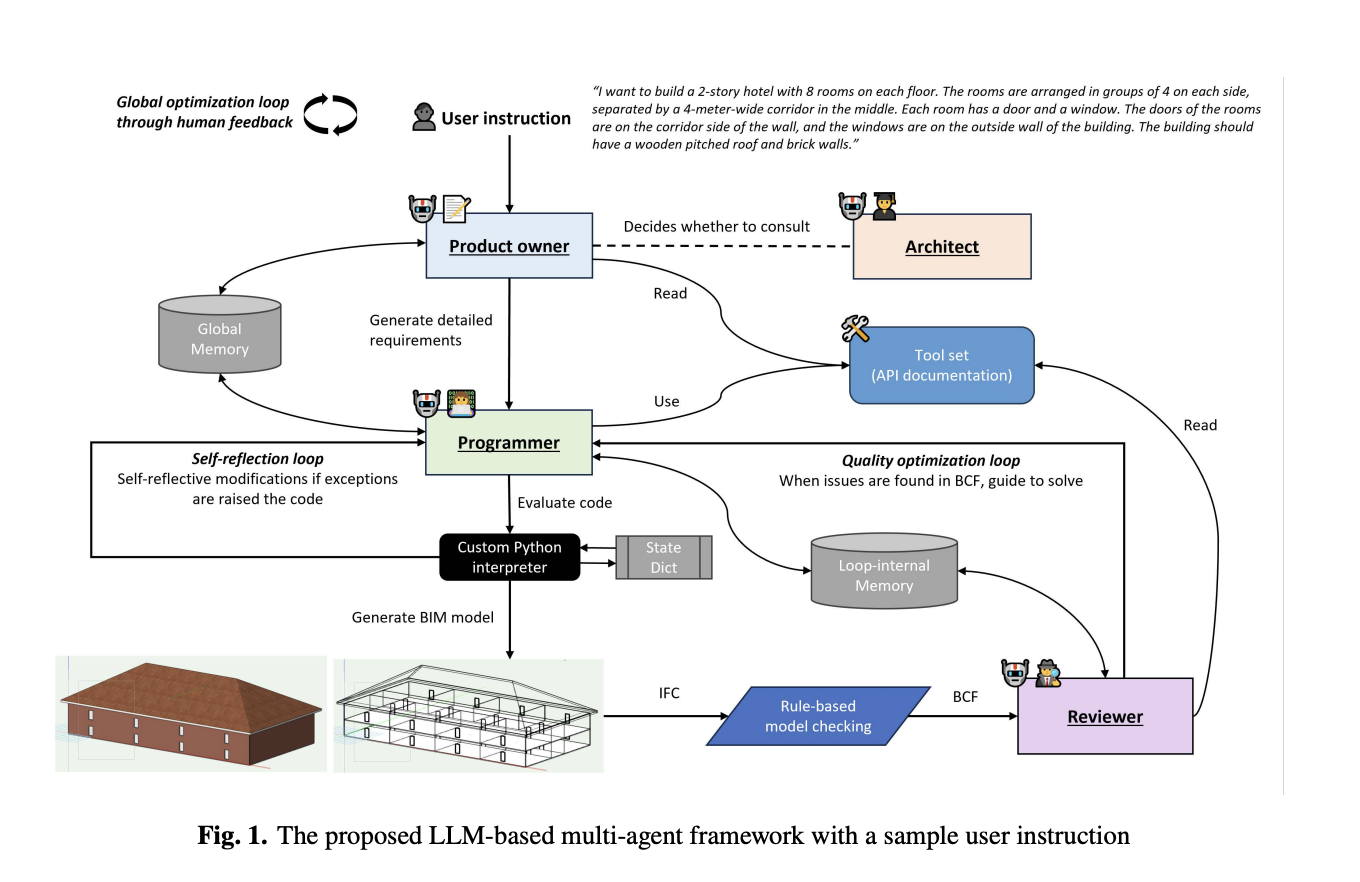

A new study by researchers at the Technical University of Munich introduces Text2BIM, an LLM-based multi-agent framework that generates 3D building models from natural-language instructions. The central idea is to translate the user's textual input into imperative code that calls the BIM authoring tool's APIs, producing editable native BIM models directly in the software. To make this work, the team employs four LLM-based agents, each with a specific role and abilities, that communicate with one another through text. The Product Owner refines the user's instructions and writes detailed requirements documents; the Architect draws on architectural knowledge to develop textual building plans; the Programmer analyzes the requirements and writes the modeling code; and the Reviewer identifies problems in the resulting model and suggests code optimizations to fix them. Splitting the work this way lets each agent concentrate on one step of turning a design idea into a model.
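
To make the division of labor concrete, here is a minimal Python sketch of a text-passing pipeline among the four roles. It is not the authors' implementation: the `Agent` class, `run_pipeline`, the `check_model` callback, and the fake LLM are hypothetical placeholders, and a real system would call an actual LLM and inspect the generated BIM model rather than a code string.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical signature for an LLM call: (role instructions, input text) -> output text.
LLM = Callable[[str, str], str]


@dataclass
class Agent:
    role: str
    instructions: str
    llm: LLM

    def run(self, message: str) -> str:
        # Every agent communicates purely through text.
        return self.llm(self.instructions, message)


def run_pipeline(user_prompt: str,
                 agents: dict[str, Agent],
                 check_model: Callable[[str], list[str]],
                 max_rounds: int = 3) -> tuple[str, list[str]]:
    """Product Owner -> Architect -> Programmer, then Reviewer-driven revision rounds."""
    requirements = agents["product_owner"].run(user_prompt)
    plan = agents["architect"].run(requirements)
    code = agents["programmer"].run(plan)
    log = [requirements, plan, code]
    for _ in range(max_rounds):
        issues = check_model(code)  # hypothetical checker that reports model problems
        if not issues:
            break
        advice = agents["reviewer"].run("\n".join(issues))
        # The Programmer revises its previous code using the Reviewer's advice.
        code = agents["programmer"].run(code + "\n# Reviewer: " + advice)
        log += [advice, code]
    return code, log


# Demo with a fake, deterministic "LLM" that just tags its input.
def fake_llm(instructions: str, message: str) -> str:
    return f"[{instructions}] {message}"


agents = {
    "product_owner": Agent("Product Owner", "write requirements", fake_llm),
    "architect": Agent("Architect", "write building plan", fake_llm),
    "programmer": Agent("Programmer", "write modeling code", fake_llm),
    "reviewer": Agent("Reviewer", "suggest fixes", fake_llm),
}
final_code, log = run_pipeline(
    "a two-storey house",
    agents,
    check_model=lambda code: [] if "roof" in code else ["model has no roof"],
)
```

Keeping every hand-off as plain text mirrors the article's description of agents that communicate via text; the revision loop is capped by `max_rounds` so that an unresolved issue cannot loop forever.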

The agents are also given manually created tool functions, which the LLMs can treat as concise, high-level API interfaces. Because the native APIs of BIM authoring software are typically low-level and fine-grained, each tool encapsulates the logic for combining several callable API functions to accomplish its task. By incorporating precise design criteria and engineering logic, a tool can carry out modeling tasks precisely while sparing the LLM the complexity and tedium of low-level API calls. However, constructing generic tool functions that handle different building scenarios is not easy.
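
The idea of a tool function can be illustrated with a small sketch. `FakeBIMDocument`, `add_wall`, `add_slab`, and `create_rectangular_storey` are hypothetical names invented for this example, not Vectorworks API calls; the point is that one high-level call bundles several low-level ones and applies a simple design rule along the way.

```python
from dataclasses import dataclass, field

Point = tuple[float, float]


@dataclass
class FakeBIMDocument:
    """Hypothetical stand-in for a BIM tool's low-level API (not Vectorworks' real API)."""
    objects: list[dict] = field(default_factory=list)

    def add_wall(self, start: Point, end: Point, height: float, thickness: float) -> int:
        self.objects.append({"type": "wall", "start": start, "end": end,
                             "height": height, "thickness": thickness})
        return len(self.objects) - 1

    def add_slab(self, outline: list[Point], thickness: float) -> int:
        self.objects.append({"type": "slab", "outline": outline, "thickness": thickness})
        return len(self.objects) - 1


def create_rectangular_storey(doc: FakeBIMDocument, origin: Point, width: float,
                              depth: float, height: float = 3.0,
                              wall_thickness: float = 0.2,
                              slab_thickness: float = 0.25) -> list[int]:
    """Hypothetical high-level tool: one call replaces five low-level API calls."""
    if width <= 0 or depth <= 0 or height <= 0:
        raise ValueError("dimensions must be positive")  # simple design rule
    x, y = origin
    corners = [(x, y), (x + width, y), (x + width, y + depth), (x, y + depth)]
    ids = [doc.add_slab(corners, slab_thickness)]
    # Close the loop: each corner connects to the next, the last back to the first.
    for start, end in zip(corners, corners[1:] + corners[:1]):
        ids.append(doc.add_wall(start, end, height, wall_thickness))
    return ids


doc = FakeBIMDocument()
ids = create_rectangular_storey(doc, origin=(0.0, 0.0), width=10.0, depth=8.0)
```

An LLM calling `create_rectangular_storey` only has to choose an origin and dimensions; wall ordering, closure, and default thicknesses are handled inside the tool.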

To decide which tool functions to include, the researchers combined quantitative and qualitative analysis. They first examined user log files to find out which commands (tools) human designers use most often in BIM authoring software. They used one day of log data collected from 1,000 anonymous users of the design program Vectorworks worldwide, about 25 million records in seven languages. After cleaning and filtering the raw data, they extracted the fifty most frequently used commands, grounding the framework's toolset in how designers actually work.
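
The counting step can be sketched with Python's `collections.Counter`. The `user;command` log format and the alias table that maps localized command names to one canonical name are assumptions made for illustration; the article does not describe the log schema or how the seven languages were reconciled.

```python
from collections import Counter


def top_commands(records, aliases, n=50):
    """Count commands in raw log lines and return the n most frequent.

    records: iterable of lines like "user42;Wall" (hypothetical format).
    aliases: maps localized command names to a canonical name (hypothetical).
    """
    counts = Counter()
    for line in records:
        parts = line.strip().split(";")
        if len(parts) != 2 or not parts[1].strip():
            continue  # drop malformed or empty records during cleaning
        command = parts[1].strip()
        counts[aliases.get(command, command)] += 1
    return counts.most_common(n)


logs = ["u1;Wall", "u2;Mur", "u1;Wall", "u3;Slab", "garbage", "u2;Door", "u4;"]
aliases = {"Mur": "Wall"}  # illustrative French -> English mapping
result = top_commands(logs, aliases, n=2)
```

Here the two malformed lines are discarded and the French "Mur" is merged into "Wall", so "Wall" ranks first with three uses.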

To guide the development of the agents' tool functions, they excluded commands that are primarily mouse-driven and highlighted in orange, in their chart of the top commands, the generic modeling commands that can be implemented via APIs. The researchers also examined Marionette, Vectorworks' built-in graphical programming tool, which is comparable to Dynamo and Grasshopper. Such visual scripting systems typically offer encapsulated versions of the underlying APIs that are tuned to particular situations, and the nodes or batteries that designers work with provide a more intuitive, higher-level programming interface. Software vendors group the default nodes by capability to make them easier to understand and use. Because their tool functions serve a similar purpose, and because the use case produces conventional BIM models, the team drew on the nodes in the “BIM” category.
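
One way to express this shortlisting is as two filters followed by an intersection. This is a hypothetical sketch: the `Command` and `Node` records, their flags, and the idea of matching commands to nodes by name are assumptions, since the article describes the selection only qualitatively.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Command:
    name: str
    mouse_driven: bool   # e.g. selection or view navigation
    api_available: bool  # can be reproduced through the scripting API


@dataclass(frozen=True)
class Node:
    name: str
    category: str        # category shown in the visual scripting library


def candidate_tools(commands: list[Command], nodes: list[Node]) -> list[str]:
    """Keep API-implementable modeling commands that also have a "BIM" node."""
    modeling = {c.name for c in commands if not c.mouse_driven and c.api_available}
    bim_nodes = {n.name for n in nodes if n.category == "BIM"}
    return sorted(modeling & bim_nodes)


commands = [Command("Wall", False, True), Command("Pan", True, False),
            Command("Door", False, True), Command("Undo", False, False)]
nodes = [Node("Wall", "BIM"), Node("Door", "BIM"), Node("Slab", "BIM"), Node("Add", "Math")]
tools = candidate_tools(commands, nodes)
```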

By integrating the framework into the BIM authoring tool Vectorworks, the researchers built an interactive software prototype. Their implementation is based on Vectorworks' open-source web palette plugin template. Using Vue.js and a web environment built on the Chromium Embedded Framework (CEF), they embedded a dynamic web interface in Vectorworks, which gave them an easy-to-use web palette. The palette's logic is implemented with C++ functions: the backend is a C++ application that defines asynchronous JavaScript functions and exposes them within the web frame.
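
The real bridge is written in C++ and JavaScript on top of CEF; the following Python sketch only illustrates the general pattern of a backend registering named asynchronous functions that a frontend can call without blocking. `Bridge`, `expose`, and `createWall` are hypothetical and do not correspond to the CEF or Vectorworks APIs.

```python
import asyncio
from typing import Awaitable, Callable


class Bridge:
    """Toy analogue of exposing backend functions to a web frame (not the CEF API)."""

    def __init__(self) -> None:
        self._handlers: dict[str, Callable[..., Awaitable[object]]] = {}

    def expose(self, name: str):
        # Decorator that registers an async backend function under a public name.
        def register(fn):
            self._handlers[name] = fn
            return fn
        return register

    async def call(self, name: str, *args):
        if name not in self._handlers:
            raise KeyError(f"no backend function named {name!r}")
        return await self._handlers[name](*args)


bridge = Bridge()


@bridge.expose("createWall")
async def create_wall(length: float) -> dict:
    await asyncio.sleep(0)  # stand-in for work done by the host application
    return {"type": "wall", "length": length}


async def frontend():
    # The palette can issue several calls concurrently instead of blocking its UI.
    return await asyncio.gather(bridge.call("createWall", 4.0),
                                bridge.call("createWall", 6.5))


results = asyncio.run(frontend())
```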

The evaluation uses test user prompts (instructions) and compares the output of different LLMs: GPT-4o, Mistral-Large-2, and Gemini-1.5-Pro. The researchers also tested the framework's ability to produce designs in open-ended contexts by deliberately omitting some construction constraints from the test prompts. To account for the stochastic nature of generative models, they ran each test prompt through each LLM five times, yielding 391 IFC models (including intermediate results from the optimization process). The findings show that the method successfully creates well-structured building models that are logically consistent with the abstract ideas the user specified.
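
The repeated-run protocol can be sketched as a nested loop over models, prompts, and runs. `evaluate`, `fake_generate`, and the two example prompts are hypothetical; in the actual study each run would invoke the full multi-agent framework and export an IFC model.

```python
import itertools

MODELS = ["GPT-4o", "Mistral-Large-2", "Gemini-1.5-Pro"]
RUNS_PER_PROMPT = 5


def evaluate(prompts, generate, runs=RUNS_PER_PROMPT):
    """Run every prompt through every model several times.

    generate(model, prompt, seed) is a hypothetical wrapper around the framework.
    """
    results = []
    for model, prompt in itertools.product(MODELS, prompts):
        for run in range(runs):
            results.append({"model": model, "prompt": prompt, "run": run,
                            "output": generate(model, prompt, seed=run)})
    return results


def fake_generate(model, prompt, seed):
    return f"{model}|{prompt}|run{seed}"


prompts = ["a single-family house", "an office building"]
results = evaluate(prompts, fake_generate)
```

With three models, two prompts, and five runs this produces 30 records. The study's figure of 391 IFC models also counts intermediate optimization results, so it is not simply a product of models, prompts, and runs.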

The paper focuses solely on generating regular building models during the early design stage. The generated models include only essential building elements such as walls, slabs, roofs, doors, and windows, along with indicative semantic data such as descriptive text, locations, and material descriptions. By freeing designers from repetitive modeling commands, this work lets them express their design intent more intuitively. The team notes that users can always return to the BIM authoring tool to modify the generated models, striking a balance between automation and technical autonomy.
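
The scope restriction can be expressed as a simple data structure plus a check. The `Element` class, the `ALLOWED_TYPES` set, and the rule that every element needs a material are illustrative assumptions, not the paper's schema; real models would be exported as IFC entities.

```python
from dataclasses import dataclass, field

# Element types within the early-design scope described above.
ALLOWED_TYPES = {"wall", "slab", "roof", "door", "window"}


@dataclass
class Element:
    kind: str
    storey: int
    # Indicative semantic data, e.g. a description, location, and material.
    properties: dict[str, str] = field(default_factory=dict)


def validate(elements: list[Element]) -> list[str]:
    """Return problems found; an empty list means every element passed (hypothetical rules)."""
    problems = []
    for i, e in enumerate(elements):
        if e.kind not in ALLOWED_TYPES:
            problems.append(f"element {i}: unsupported type {e.kind!r}")
        if "material" not in e.properties:
            problems.append(f"element {i}: missing material")
    return problems


model = [
    Element("wall", 0, {"material": "concrete", "description": "exterior wall"}),
    Element("window", 0, {"material": "glass"}),
    Element("stair", 0, {}),
]
issues = validate(model)
```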

Dhanshree Shenwai is a computer science engineer with experience at FinTech companies covering the financial, cards and payments, and banking domains, and a keen interest in applications of AI. She is enthusiastic about exploring new technologies and advancements that make everyone's life easier in today's evolving world.