Apple Researchers Introduce A Groundbreaking Artificial Intelligence Approach to Dense 3D Reconstruction from Dynamically-Posed RGB Images

Using learned priors, RGB-only reconstruction with a monocular camera has made significant progress on two long-standing problems: low-texture regions and the inherent ambiguity of image-based reconstruction. Practical solutions that run in real time have drawn considerable attention, since they are essential for interactive applications on mobile devices. However, current state-of-the-art reconstruction systems have yet to consider a crucial requirement: a practical approach must be both online and real-time.

To operate online, an algorithm must produce accurate incremental reconstructions during image capture, relying only on past and current observations at each time step. This requirement breaks a key assumption of previous work: that each view has an accurate, fully optimized pose estimate. Instead, under real-world scanning conditions a simultaneous localization and mapping (SLAM) system drifts, producing a stream of dynamic pose estimates in which earlier poses are revised through pose graph optimization and loop closure. Such pose updates from SLAM are common in online scanning.
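
To make the idea of a pose stream concrete, the sketch below compares consecutive SLAM outputs and reports which previously seen frames had their poses revised. It is a minimal illustration under simplifying assumptions: poses are reduced to 3D camera positions rather than full 6-DoF rigid transforms, the stream values are made up, and `changed_frames` and its `tol` threshold are hypothetical names, not part of any published API.

```python
import math
from typing import Dict, List, Tuple

# Simplifying assumption: a pose is only a 3D camera position (x, y, z);
# real SLAM poses are full 6-DoF rigid transforms.
Pose = Tuple[float, float, float]


def changed_frames(prev: Dict[int, Pose], curr: Dict[int, Pose],
                   tol: float = 1e-3) -> List[int]:
    """Return ids of frames present in both snapshots whose pose moved more than tol."""
    changed = []
    for frame_id, old in prev.items():
        new = curr.get(frame_id)
        if new is not None and math.dist(old, new) > tol:
            changed.append(frame_id)
    return sorted(changed)


# A made-up pose stream: each SLAM step emits estimates for every frame seen so far.
stream = [
    {0: (0.0, 0.0, 0.0)},
    {0: (0.0, 0.0, 0.0), 1: (0.5, 0.0, 0.0)},
    # A loop closure at this step revises frame 1 and adds frame 2.
    {0: (0.0, 0.0, 0.0), 1: (0.45, 0.02, 0.0), 2: (1.0, 0.1, 0.0)},
]

for step in range(1, len(stream)):
    print(step, changed_frames(stream[step - 1], stream[step]))
```

Frames flagged this way are the ones whose contribution to an already-built reconstruction becomes stale and would need to be revisited.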

As shown in Figure 1, the reconstruction must stay consistent with the SLAM system by honouring these updates. However, recent work on dense RGB-only reconstruction has not yet addressed the dynamic nature of camera pose estimates in online applications. Despite significant advances in reconstruction quality, these methods have not explicitly handled dynamic poses and have kept the conventional problem formulation of statically-posed input images. In contrast, the researchers acknowledge that these updates exist and propose a way to incorporate pose update handling into existing RGB-only methods.

Figure 1: Pose data from a SLAM system (a, b) may be updated (c, red-green) during live 3D reconstruction. The proposed pose update handling technique produces globally consistent and accurate reconstructions, whereas ignoring these updates results in incorrect geometry.

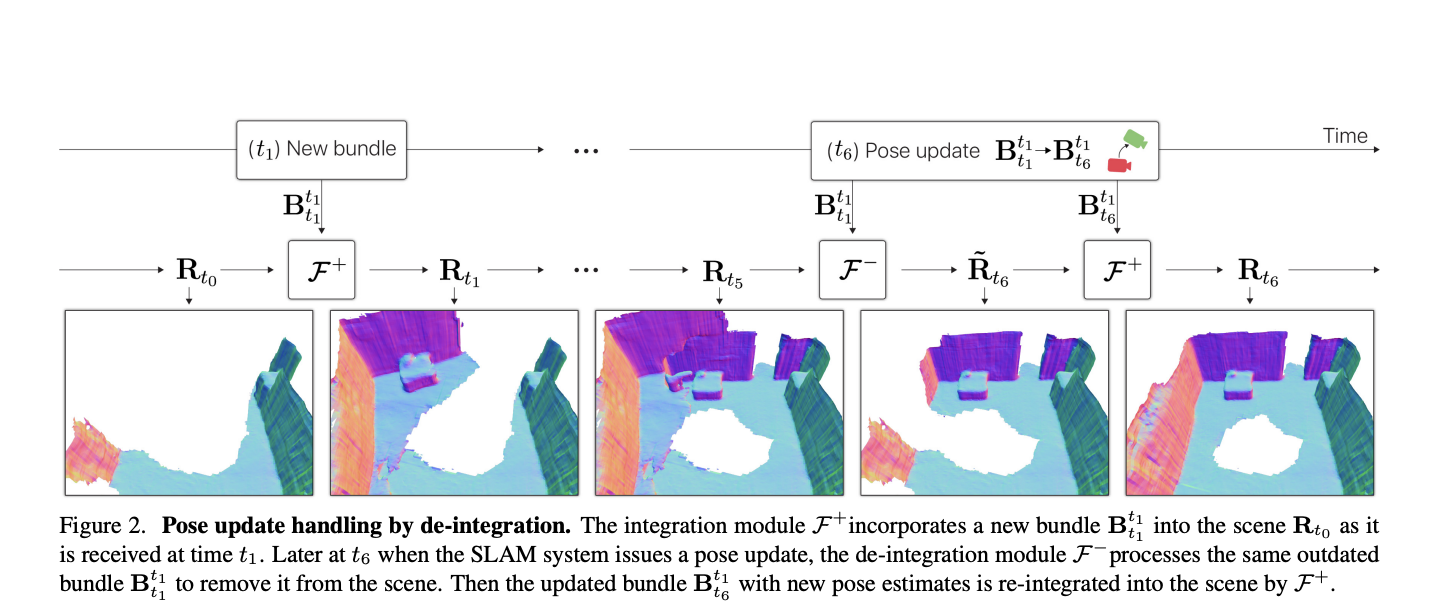

The approach is inspired by BundleFusion, an RGB-D technique that uses a linear update algorithm to integrate new views into the scene. Because the update is linear, older views can be de-integrated and then re-integrated once an updated pose becomes available. The study proposes de-integration as a general framework for handling pose updates in live reconstruction from RGB images. It examines three representative RGB-only reconstruction techniques that assume static poses and addresses the limitations of each in the online setting.
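
The linear update that makes de-integration possible can be illustrated with the weighted running average used in TSDF fusion. The sketch below assumes a single voxel with unit per-view weights and takes each view's signed distance as given (in a real system it is computed by projecting the voxel into the view using that view's pose); the `Voxel` class and `reintegrate` helper are illustrative names, not BundleFusion's code. Because integration is an invertible weighted average, subtracting a view's old observation and adding its new one yields the same state as fusing the corrected observations from scratch.

```python
import math
from dataclasses import dataclass


@dataclass
class Voxel:
    """A single TSDF voxel storing a weighted running average of signed distances."""
    tsdf: float = 0.0
    weight: float = 0.0

    def integrate(self, d: float, w: float = 1.0) -> None:
        # D' = (W*D + w*d) / (W + w),  W' = W + w
        total = self.weight + w
        self.tsdf = (self.weight * self.tsdf + w * d) / total
        self.weight = total

    def deintegrate(self, d: float, w: float = 1.0) -> None:
        # Exact algebraic inverse of integrate():
        # D' = (W*D - w*d) / (W - w),  W' = W - w
        total = self.weight - w
        if total <= 0.0:
            # Removing the only remaining observation leaves an empty voxel.
            self.tsdf, self.weight = 0.0, 0.0
            return
        self.tsdf = (self.weight * self.tsdf - w * d) / total
        self.weight = total


def reintegrate(voxel: Voxel, d_old: float, d_new: float, w: float = 1.0) -> None:
    """Swap one view's contribution after its pose (and thus its observation) changed."""
    voxel.deintegrate(d_old, w)
    voxel.integrate(d_new, w)


# Hypothetical per-view signed distances observed at one voxel.
v = Voxel()
for d in (0.2, 0.3, -0.1):
    v.integrate(d)

# SLAM revises view 1's pose, so its observation changes from 0.3 to 0.1.
reintegrate(v, d_old=0.3, d_new=0.1)

fresh = Voxel()
for d in (0.2, 0.1, -0.1):
    fresh.integrate(d)
print(math.isclose(v.tsdf, fresh.tsdf), v.weight == fresh.weight)  # True True
```

The order in which views were originally fused does not matter here, which is why any old view can be removed at any time.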

Specifically, researchers from Apple and the University of California, Santa Barbara propose a novel deep learning-based non-linear de-integration technique that enables online reconstruction for methods such as NeuralRecon, which rely on a learned, non-linear update rule. To validate this technique and support future research, they also introduce LivePose, a new dataset of complete, dynamic pose sequences for ScanNet generated with BundleFusion. Experiments demonstrate the effectiveness of the de-integration strategy, showing qualitative and quantitative improvements on key reconstruction metrics for three state-of-the-art systems.
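
Why a learned rule needs its own de-integration can be shown with a toy example. The sketch below is not NeuralRecon's actual fusion module: `toy_nonlinear_update` is a hypothetical tanh recurrence with arbitrary coefficients, standing in for any learned, non-linear update. It fuses the same three observations in two orders. The running mean gives the same result either way, so a view's contribution can be subtracted in closed form; the non-linear rule does not, because each view's effect depends on the views fused before and after it, and there is no simple formula for removing it.

```python
import math
from typing import Callable, Sequence

Update = Callable[[float, float, int], float]


def fuse(observations: Sequence[float], update: Update) -> float:
    """Fold per-view observations into one state using a recurrent update rule."""
    state = 0.0
    for i, x in enumerate(observations):
        state = update(state, x, i)
    return state


def linear_update(state: float, x: float, i: int) -> float:
    # Running mean: equivalent to TSDF weighted averaging with unit weights.
    return state + (x - state) / (i + 1)


def toy_nonlinear_update(state: float, x: float, i: int) -> float:
    # Hypothetical stand-in for a learned rule; the coefficients are arbitrary.
    return math.tanh(0.9 * state + 1.5 * x)


views = [0.2, 0.3, -0.1]
reordered = [0.3, -0.1, 0.2]

print(fuse(views, linear_update), fuse(reordered, linear_update))
print(fuse(views, toy_nonlinear_update), fuse(reordered, toy_nonlinear_update))
```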

Their principal contributions are:
• They define a new vision task that more closely reflects real-world conditions for mobile interactive applications: dense online 3D reconstruction from dynamically-posed RGB images.
• They release LivePose, the first publicly available dataset of dynamic SLAM pose estimates. It includes the full SLAM pose stream for each of the 1,613 scans in the ScanNet dataset.
• They develop new training and evaluation protocols to support reconstruction with dynamic poses.
• They propose a novel recurrent de-integration module that removes outdated scene content, enabling dynamic-pose handling for methods with learned, recurrent view integration. The module learns how to handle pose updates.

Aneesh Tickoo is a consulting intern at MarktechPost. He is currently pursuing his undergraduate degree in Data Science and Artificial Intelligence at the Indian Institute of Technology (IIT), Bhilai. He spends most of his time working on projects aimed at harnessing the power of machine learning. His research interest is image processing, and he is passionate about building solutions around it. He loves to connect with people and collaborate on interesting projects.