Beyond the Fitzpatrick Scale: This AI Paper From Sony Introduces a Multidimensional Approach to Assess Skin Color Bias in Computer Vision

Discrimination based on color persists in many societies worldwide despite the progress made by civil rights and social justice movements. It harms individuals, communities, and society as a whole, with psychological, social, economic, and health-related consequences. Efforts to address it should involve comprehensive strategies that promote equity, inclusion, and diversity.

It may be surprising that machine learning algorithms can also discriminate along lines such as race and gender. According to researchers at Sony AI and the University of Tokyo, recent studies have shown that such models can produce incorrect diagnoses of skin lesions (abnormal changes in skin structure) and inaccurate heart rate measurements for people with darker skin tones. The Fitzpatrick Skin Type scale, originally developed in dermatology to classify how skin reacts to sun exposure (for example, to guide sun protection advice), has become the common way to annotate skin tone in computer vision. The researchers argue that relying solely on this one scale can lead to flawed decisions.

Describing apparent skin color remains an open challenge, because the color we finally perceive results from complex physical and biological phenomena. Skin is multilayered, and individuals differ in the amounts and distribution of carotene, hemoglobin, and melanin across these layers. Skin reflectance can be measured with a spectrophotometer, but in computer vision Fitzpatrick skin types are usually assigned by annotators, and misclassification can occur because the existing skin-type categories are neither objective nor descriptive enough.

To overcome these limitations, the researchers turn to colorimetry to derive quantitative metrics that yield more reliable skin color scores. Building on the standards established by the CIE (International Commission on Illumination) for illuminants and tristimulus values, they work from images in the standard RGB (sRGB) color space. For a more comprehensive assessment of skin color, they use the hue angle to describe the perceived gradation of color.
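The article does not spell out the exact computation, so the following is only a minimal sketch assuming the textbook CIE pipeline: decode an 8-bit sRGB pixel, convert it to CIE XYZ under the D65 white point, convert that to CIELAB, and take the hue angle h* = atan2(b*, a*). Perceptual lightness L* comes out of the same conversion; treating (L*, h*) as the two dimensions of the score is an assumption here, and the function names are hypothetical.

```python
import math

# D65 reference white in CIE XYZ, with Y normalized to 1.0
WHITE_D65 = (0.95047, 1.0, 1.08883)


def srgb_to_linear(channel: int) -> float:
    """Undo the sRGB transfer curve for one 8-bit channel."""
    c = channel / 255.0
    if c <= 0.04045:
        return c / 12.92
    return ((c + 0.055) / 1.055) ** 2.4


def srgb_to_lab(rgb: tuple[int, int, int]) -> tuple[float, float, float]:
    """Convert an 8-bit sRGB triple to CIELAB (L*, a*, b*) under D65."""
    r, g, b = (srgb_to_linear(v) for v in rgb)
    # Linear sRGB -> CIE XYZ using the standard sRGB/D65 matrix
    x = 0.4124 * r + 0.3576 * g + 0.1805 * b
    y = 0.2126 * r + 0.7152 * g + 0.0722 * b
    z = 0.0193 * r + 0.1192 * g + 0.9505 * b

    def f(t: float) -> float:
        # CIELAB companding: cube root above a small threshold, linear below
        delta = 6 / 29
        if t > delta ** 3:
            return t ** (1 / 3)
        return t / (3 * delta ** 2) + 4 / 29

    fx, fy, fz = (f(v / n) for v, n in zip((x, y, z), WHITE_D65))
    return 116 * fy - 16, 500 * (fx - fy), 200 * (fy - fz)


def skin_color_scores(rgb: tuple[int, int, int]) -> tuple[float, float]:
    """Hypothetical helper: return (lightness L*, hue angle h* in degrees)."""
    lightness, a, b = srgb_to_lab(rgb)
    # Hue angle in [0, 360): 0 deg points toward red (+a*), 90 deg toward yellow (+b*)
    hue = math.degrees(math.atan2(b, a)) % 360
    return lightness, hue
```

For example, `skin_color_scores((255, 0, 0))` returns roughly (53.2, 40.0) for pure sRGB red. A per-image score would normally be aggregated over the skin pixels of a face, a step this sketch leaves out.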

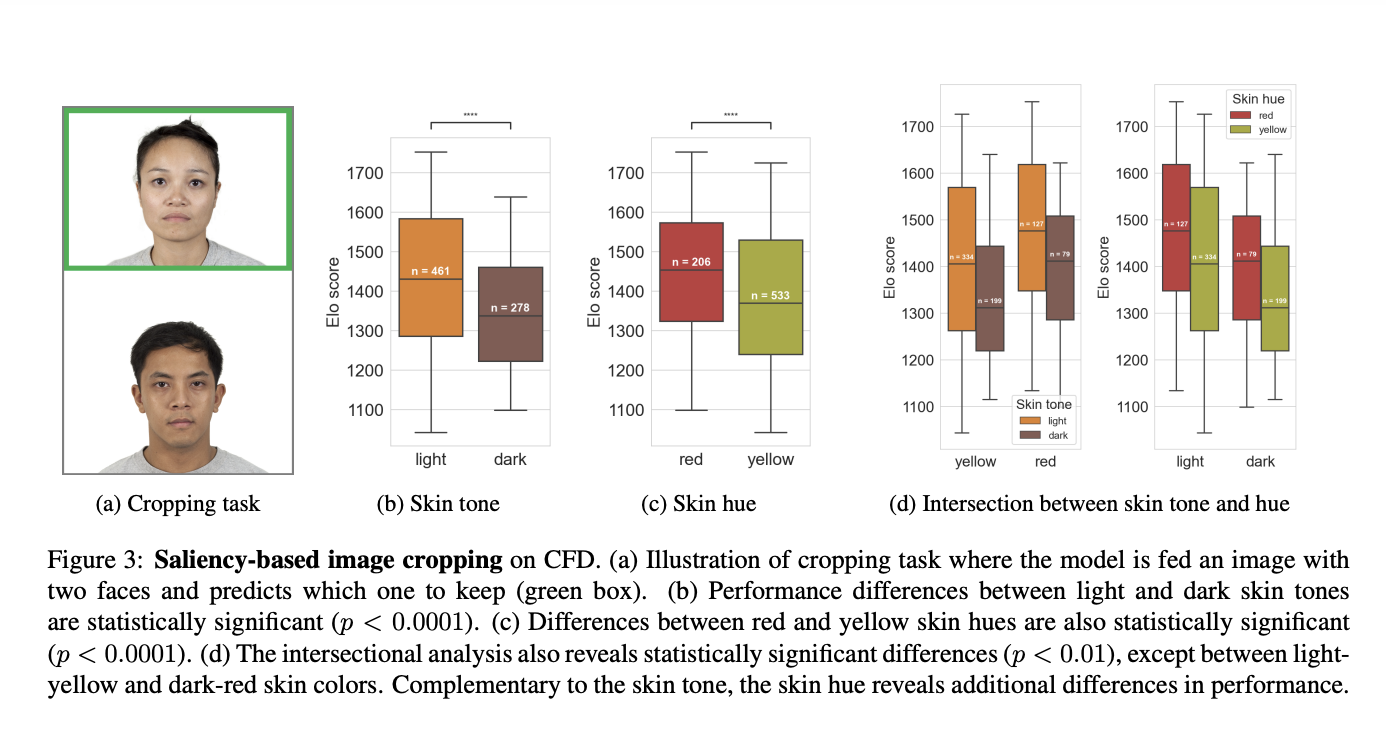

They first quantified skin color bias in face datasets and in generative models trained on such datasets. They then broke down the results of saliency-based image cropping and face verification algorithms by skin color. For fairness benchmarking, they use multidimensional skin color scores instead of a one-dimensional score. They generated about 10,000 images with generative adversarial networks (GANs) and diffusion models for the analysis.
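The article does not detail how the results were broken down, so the sketch below is purely illustrative. It places each per-image result into a 2-D grid of lightness (L*) and hue (h*) cells, reports how many samples fall in each cell (a simple view of dataset composition), and computes accuracy per cell plus the largest gap between cells. The split points, the `Sample` record, and all function names are hypothetical choices for this example, not values or code from the paper.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Sample:
    lightness: float  # perceptual lightness L* (0-100)
    hue: float        # hue angle h* in degrees
    correct: bool     # whether the model handled this sample correctly


# Hypothetical split points, chosen only for illustration
LIGHTNESS_SPLIT = 60.0
HUE_SPLIT = 55.0


def skin_bin(s: Sample) -> tuple[str, str]:
    """Map a sample to a (lightness, hue) cell of a 2-D grid."""
    tone = "light" if s.lightness >= LIGHTNESS_SPLIT else "dark"
    # Larger hue angles lean yellow, smaller ones lean red in CIELAB
    hue = "yellow" if s.hue >= HUE_SPLIT else "red"
    return tone, hue


def breakdown(samples: list[Sample]) -> dict[tuple[str, str], tuple[int, float]]:
    """Return {cell: (sample count, accuracy)} for every non-empty cell."""
    counts: dict[tuple[str, str], int] = defaultdict(int)
    hits: dict[tuple[str, str], int] = defaultdict(int)
    for s in samples:
        cell = skin_bin(s)
        counts[cell] += 1
        hits[cell] += int(s.correct)
    return {cell: (n, hits[cell] / n) for cell, n in counts.items()}


def max_accuracy_gap(table: dict[tuple[str, str], tuple[int, float]]) -> float:
    """Largest accuracy difference between any two non-empty cells."""
    accs = [acc for _, acc in table.values()]
    return max(accs) - min(accs)
```

With two correctly handled samples in a light, yellow-leaning cell and two samples (one wrong) in a dark, red-leaning cell, `max_accuracy_gap(breakdown(samples))` reports 0.5. A split on lightness alone would merge cells that differ in hue; the second axis keeps them apart.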

Their multidimensional skin color scale offers a more representative way to surface socially relevant biases related to skin color in computer vision. It could improve diversity in data collection by encouraging specifications that better capture skin color variability, and it could improve the identification of dataset and model biases by highlighting their limitations, paving the way for fairness-aware training methods.

Check out the Paper and Sony Article. All credit for this research goes to the researchers on this project.

Arshad is an intern at MarktechPost. He is currently pursuing an Integrated MSc in Physics at the Indian Institute of Technology Kharagpur. He believes that understanding things at a fundamental level leads to new discoveries, which in turn drive advances in technology. He is passionate about understanding nature at a fundamental level with the help of tools such as mathematical models, ML models, and AI.