University Hospital of Basel Unveils TotalSegmentator: A Deep Learning Segmentation Model that can Automatically Segment Major Anatomical Structures in Body CT Images


Over the past several years, both the number of CT scans performed and the available data processing capacity have grown. Advances in deep learning have greatly improved the capabilities of image analysis algorithms. Better data storage, faster processing, and higher-quality algorithms have together allowed radiological research to work with larger samples. Many of these studies depend on the segmentation of anatomical structures. Radiological image segmentation can be used for advanced biomarker extraction, automatic pathology detection, and tumor load quantification. Segmentation is also already part of routine clinical practice, for example in surgical and radiotherapy planning.

Separate models exist for segmenting individual organs (such as the pancreas, spleen, colon, or lung) on CT images, and researchers have also combined data from multiple anatomical structures into a single model. However, all previous models cover only a small subset of the essential anatomical structures and are trained on small datasets that are not representative of routine clinical imaging. Many segmentation models and datasets are also hard to obtain, which severely limits their usefulness to researchers. Access to publicly available datasets often involves lengthy paperwork or working through data providers that are cumbersome or rate-limited.

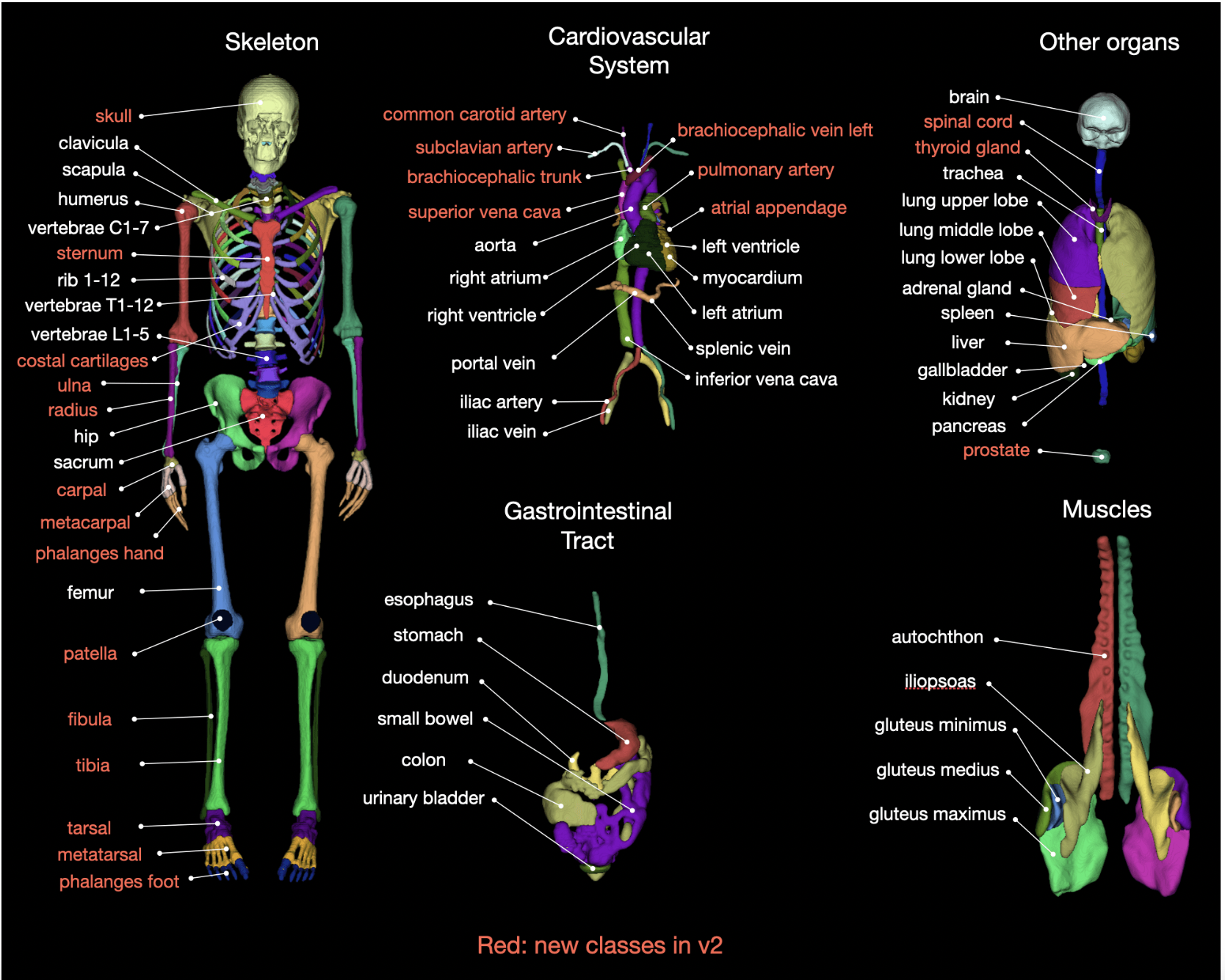

Researchers at the Clinic of Radiology and Nuclear Medicine, University Hospital Basel, used 1,204 CT examinations to develop a method for segmenting 104 anatomical structures. The examinations were acquired with a variety of CT scanners, acquisition settings, and contrast phases. Their model, TotalSegmentator, can segment most of the body's anatomically important structures with minimal user input and performs reliably across clinical environments. Its high accuracy (Dice score of 0.943) and robustness on diverse clinical datasets set it apart from other freely available tools. The team also applied it to a large dataset of over 4,000 CT examinations to examine and report age-related changes in the volume and attenuation of various organs.
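The Dice score quoted above measures the overlap between a predicted mask and a reference mask, 2|A∩B| / (|A| + |B|), where 1.0 means perfect agreement. The sketch below, assuming NumPy arrays of integer label IDs, computes it per structure and averages over a chosen set of labels. How the paper aggregates Dice across its 104 structures is not stated here, so the simple mean in the hypothetical `mean_dice` helper is an assumption, as is scoring two empty masks as 1.0.

```python
import numpy as np


def dice_score(pred: np.ndarray, ref: np.ndarray) -> float:
    """Dice similarity coefficient between two binary masks: 2|A∩B| / (|A| + |B|)."""
    pred = pred.astype(bool)
    ref = ref.astype(bool)
    denom = int(pred.sum() + ref.sum())
    if denom == 0:
        # Both masks empty: scored as perfect agreement (an assumed convention).
        return 1.0
    return float(2.0 * np.logical_and(pred, ref).sum() / denom)


def mean_dice(pred_labels: np.ndarray, ref_labels: np.ndarray, label_ids) -> float:
    """Hypothetical helper: unweighted mean of per-structure Dice over label_ids."""
    scores = [dice_score(pred_labels == i, ref_labels == i) for i in label_ids]
    return float(np.mean(scores))


# Toy 2D label maps (0 = background, 1 and 2 = two structures).
pred = np.array([[0, 1, 1], [2, 2, 0]])
ref = np.array([[0, 1, 0], [2, 2, 2]])
print(dice_score(pred == 1, ref == 1))  # 2*1 / (2+1)
print(mean_dice(pred, ref, [1, 2]))     # mean of 2/3 and 4/5
```

Computing Dice per label rather than on the whole label map keeps large structures from dominating the score of small ones.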

The researchers have released their model as a pre-trained Python package so that anyone can use it. They point out that it needs less than 12 GB of RAM and no GPU, so it can run on any standard computer. Their dataset is also easy to obtain, with no special permissions or requests required to download it. The study used an nnU-Net-based model because nnU-Net has been shown to produce reliable results across a wide range of tasks. It is now considered the gold standard for medical image segmentation, outperforming most other approaches. Hyperparameter tuning and alternative architectures, such as transformers, can further improve on the standard nnU-Net.

As described in their paper, the model has a range of possible uses. Beyond its obvious surgical applications, fast and easily accessible organ segmentation enables individual dosimetry, as has been demonstrated for the liver and kidneys. Automated segmentation can also support research by providing clinicians with reference values, including age-dependent ones, for parameters such as attenuation (HU) and volume. Combined with a lesion-detection model, it could be used to estimate tumor load in a given body region. The model can also serve as a foundation for developing models that detect various diseases.
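Deriving the parameters mentioned above from a segmentation is straightforward once a mask is available: volume is the voxel count times the voxel volume, and mean attenuation is the average HU value inside the mask. The sketch below assumes the CT volume and masks are already loaded as NumPy arrays on the same grid, with voxel spacing in millimetres taken from the image header. The `tumor_load_fraction` helper is hypothetical and only illustrates one simple way a lesion mask could be combined with an organ mask; it is not the authors' method.

```python
import numpy as np


def organ_stats(ct_hu: np.ndarray, organ_mask: np.ndarray, spacing_mm) -> tuple:
    """Return (volume in mL, mean attenuation in HU) for a binary organ mask.

    spacing_mm: voxel size (dx, dy, dz) in millimetres (assumed from the image header).
    """
    mask = organ_mask.astype(bool)
    voxel_ml = float(np.prod(spacing_mm)) / 1000.0  # 1 mL = 1000 mm^3
    volume_ml = float(mask.sum()) * voxel_ml
    mean_hu = float(ct_hu[mask].mean()) if mask.any() else float("nan")
    return volume_ml, mean_hu


def tumor_load_fraction(organ_mask: np.ndarray, lesion_mask: np.ndarray) -> float:
    """Hypothetical helper: fraction of organ voxels that are also lesion voxels."""
    organ = organ_mask.astype(bool)
    lesion_in_organ = lesion_mask.astype(bool) & organ
    return float(lesion_in_organ.sum() / organ.sum()) if organ.any() else 0.0


# Synthetic toy volume: air everywhere, a 10x10x10-voxel "organ" at 50 HU.
ct = np.full((20, 20, 20), -1000.0)
organ = np.zeros(ct.shape, dtype=bool)
organ[5:15, 5:15, 5:15] = True
ct[organ] = 50.0
lesion = np.zeros(ct.shape, dtype=bool)
lesion[5:10, 5:15, 5:15] = True  # covers half of the organ

volume_ml, mean_hu = organ_stats(ct, organ, spacing_mm=(1.5, 1.5, 2.0))
print(volume_ml, mean_hu, tumor_load_fraction(organ, lesion))
```

Restricting the lesion mask to the organ before counting keeps lesion voxels that fall outside the organ from inflating the estimate.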

The model has been downloaded by over 4,500 researchers for use in a variety of contexts. Analyzing datasets of this size has become possible only recently, and it has demanded considerable time and effort from data scientists. Using a dataset of over 4,000 individuals who had undergone a polytrauma CT scan, this work demonstrated associations between age and the volume of numerous segmented organs. By comparison, the reference values in the literature for normal organ sizes and age-dependent organ growth are typically based on samples of only a few hundred people.
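The article does not specify which statistics were used to establish these age associations. As one common starting point, the sketch below computes a Pearson correlation coefficient and a least-squares slope (change in organ volume per year of age) with NumPy. The numbers in the example are synthetic and chosen to be perfectly linear; they are not data from the study, and a real analysis would also need to account for confounders such as sex and body size.

```python
import numpy as np


def age_association(ages, volumes_ml) -> tuple:
    """Return (Pearson r, slope in mL/year, intercept in mL) for age vs. organ volume."""
    ages = np.asarray(ages, dtype=float)
    vols = np.asarray(volumes_ml, dtype=float)
    r = float(np.corrcoef(ages, vols)[0, 1])
    # deg=1 fits vols ≈ slope * age + intercept by least squares.
    slope, intercept = np.polyfit(ages, vols, deg=1)
    return r, float(slope), float(intercept)


# Synthetic illustration only: volume shrinks by exactly 5 mL per year.
ages = [20, 40, 60, 80]
volumes = [1400, 1300, 1200, 1100]
r, slope, intercept = age_association(ages, volumes)
print(r, slope, intercept)
```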

The team notes that male patients were overrepresented in the study datasets, possibly because, on average, more men than women visit hospitals. Nevertheless, the team believes their model can serve as a starting point for more extensive studies of radiology populations. They plan to add more anatomical structures to their dataset and model in future work. They are also recruiting additional patients, adjusting for potential confounders, and running further correlation analyses toward a more comprehensive study of aging.

Check out the Paper and Project. All credit for this research goes to the researchers of this project.


Dhanshree Shenwai is a Computer Science Engineer with solid experience at FinTech companies covering the financial, cards and payments, and banking domains, and a keen interest in applications of AI. She is enthusiastic about exploring new technologies and advancements that make everyday life easier.
